How to change the default serializer in the Apache Spark shell

By default, Spark uses Java serialization, which is widely regarded as a poor choice: serializing and deserializing is costly in CPU and memory, and the generated bytes compress poorly.

So I use Kryo instead of Java’s default serializer. To test your code in spark-shell, you just need to set the spark.serializer property to KryoSerializer before starting the shell:

$ export SPARK_JAVA_OPTS="-Dspark.serializer=org.apache.spark.serializer.KryoSerializer"

$ ./bin/spark-shell

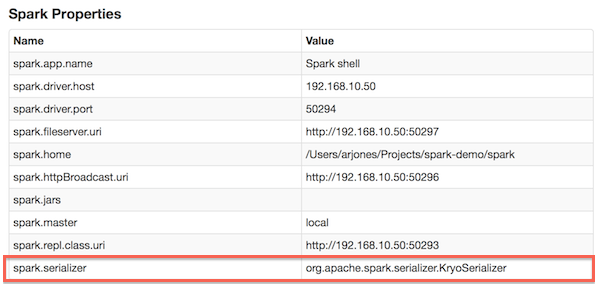
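
Newer Spark releases print a deprecation warning for SPARK_JAVA_OPTS, so the export above may not be the best fit for your version. As an alternative sketch, assuming your Spark launcher scripts accept the `--conf` flag, the same property can be passed directly on the command line:

```shell
# Assumes a Spark version whose spark-shell supports --conf key=value.
# Sets the serializer for this shell session only, without exporting anything.
$ ./bin/spark-shell --conf spark.serializer=org.apache.spark.serializer.KryoSerializer
```

Either way the setting ends up as the spark.serializer property, so the check described next applies to both approaches.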

You can check whether the configuration was applied correctly by browsing the Environment tab of Spark’s Web UI: