Tips & Tricks to migrate MySQL between datacenters

Most of our data are stored on MySQL and Cassandra, MySQL was the primary data-store when we started up the company. Currently our MySQL workload is located at AWS RDS and we would like to give a try to Microsoft Azure. This writing is to document a few tricks we learned to reduce the total time of dump, transfer and restore. Hope it can help you too.

##

Acknowledgement

It was a team effort and we wouldn’t be able to successfully complete it without Pablo Romanelli and Emiliano Perez efforts and dedication. A lot of we accomplished and learned together was based on other posts and I tried to give all links and credits.

##

Preparation

We were pretty impressed about Azure performance and support to opensource software, I was biased about Azure thinking it would only work for Microsoft Servers or .NET workloads, it was a nice surprise see it plays nice with FOSS.

Although it still doesn’t have all the AWS features, one that we missed on this point was a MySQL Managed Service as RDS. So we had to build our own MySQL server from scratch.

Besides that our work was pretty straightforward, represented by this steps:

- Synchronize RabbitMQ queues between datacenters

- Stopped the writing process at origin (data kept been published by the producers to a queue)

- Dump the database

- Transfer the backup between datacenters

- Retore it in the new MySQL

- Consume the new queue from the destination RabbitMQ which kept data synced

##

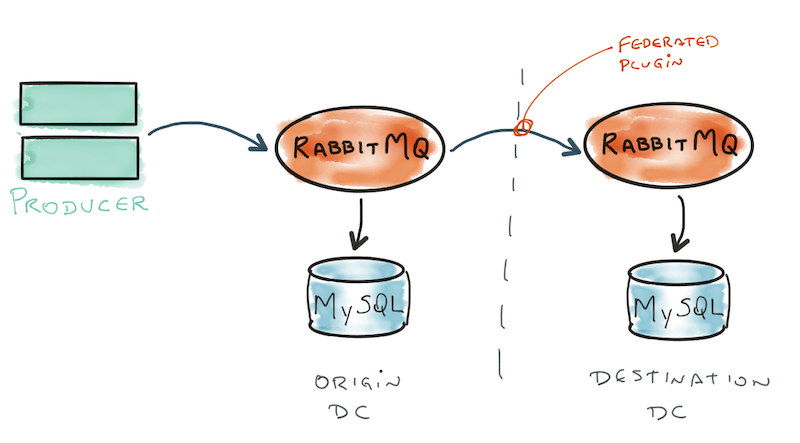

Copying payload from DC-to-DC

Luckily our system communicates through RabbitMQ, using a Message-Passing pattern, so we have been able to use RabbitMQ Federation Plugin to keep ingesting and processing data in AWS while replicating it to Azure.

Of course, it is important RabbitMQ machines must be beefy because messages will start to accumulate and pressure to the system will be generated. We were sure we could keep up with data ingestion rates during the migration.

##

NOTE:

We are interested in replacing RabbitMQ for Kafka which would be much easier to migrate and no pressure would be generated on the system. This is for another writing ;)

##

Dump the Database

Once all writing processes have been stopped, we can start a dump. Those options were we achieve best performance on both dump and restore, we assume you’re using InnoDB storage engine.

This blog post gave us several ideas: Smarter, faster backups and restores of MySQL databases using mysqldump – and other useful tips

#!/usr/bin/env bash

set -e

DB_NAME=$1

DEST_DIR=$(pwd)/dump

echo "SET AUTOCOMMIT=0;" > ${DEST_DIR}/${DB_NAME}.sql

echo "SET UNIQUE_CHECKS=0;" >> ${DEST_DIR}/${DB_NAME}.sql

echo "SET FOREIGN_KEY_CHECKS=0;" >> ${DEST_DIR}/${DB_NAME}.sql

mysqldump -u${MYSQL_USER} -p${MYSQL_PASSWD} -h${MYSQL_HOST} --add-locks --create-options \

--extended-insert --lock-tables --quick --compress --compact --disable-keys \

--routines --triggers ${DB_NAME} >> ${DEST_DIR}/${DB_NAME}.sql

echo "SET FOREIGN_KEY_CHECKS=1;" >> ${DEST_DIR}/${DB_NAME}.sql

echo "SET UNIQUE_CHECKS=1;" >> ${DEST_DIR}/${DB_NAME}.sql

echo "SET AUTOCOMMIT=1;" >> ${DEST_DIR}/${DB_NAME}.sql

echo "COMMIT;" >> ${DEST_DIR}/${DB_NAME}.sql

# -3 yield the better balance between compaction and data transfer time spent

gzip -f -3 ${DEST_DIR}/${DB_NAME}.sql

# A remote job can check if the dump/compress finished correctly

touch ${DEST_DIR}/${DB_NAME}.SUCCESS

##

Be notified when the dump process completed

Some of ours databases are big (> 100+Gb) and the dump process can take several hours. So we came up with a simple notification script that check for a file and start transferring data when it is found:

STATUS="WAIT"

while [ "${STATUS}" == "WAIT" ]; do

REMOTE_COMMAND="[ -f /opt/dumps/${db}.SUCCESS ] && echo 'OK' || echo 'WAIT'"

STATUS=$(ssh ${ORIGIN_DB_HOST} ${REMOTE_COMMAND})

echo "."

sleep 60

done

# Start rsync at this point

...

...

##

Making RSYNC Faster

Reducing SSH encryption makes data transfer much faster, we saw improvements from 4-10x in transfer speed, this is achieved by -e "ssh -l ${USER} -i /home/smx/.ssh/id_rsa -T -c arcfour -o Compression=no -x" command. Thanks to @KartikTalwar document: Rsync over SSH - (40MB/s over 1GB NICs)

Also we had to access a filesystem location where sudo was required, it was achieved adding the parameter --rsync-path "sudo rsync"

The complete command line was:

rsync -aHAXxv --numeric-ids --delete \

--rsync-path "sudo rsync" \

--progress \

-e "ssh -l ${USER} -i /home/smx/.ssh/id_rsa -T -c arcfour -o Compression=no -x" \

${ORIGIN_DB_HOST}:/opt/dumps/${db}.sql.gz \

${DEST_DIR}/${db}.sql.gz

##

Improving MySQL restore rate

Finally the longest time spent on moving the databases where in restore phase.

A little bit about hardware, besides lots of memory, we found the best combination for high performance was using RAID10. We used Azure Premium Storage which is equivalent to SSD disks. We tried with HDD 15K disks but it didn’t give us the desired performance.

We also learned a few MySQL variables should be tunned to get the best performance:

innodb_buffer_pool_size = 4G

innodb_log_buffer_size = 256M

innodb_log_file_size = 1G

innodb_write_io_threads = 16

innodb_autoinc_lock_mode = 2

innodb_flush_log_at_trx_commit = 0

-

innodb_buffer_pool_size will cache frequently read data

-

innodb_log_buffer_size: Larger buffer reduces write I/O to Transaction Logs

-

innodb_log_file_size: Larger log file reduces checkpointing and write I/O

-

innodb_write_io_threads: Service Write Operations to .ibd files. According to MySQL Documentation on Configuring the Number of Background InnoDB I/O Threads, each thread can handle up to 256 pending I/O requests. Default for MySQL is 4, 8 for Percona Server. Max is 64.

-

innodb_autoinc_lock_mode: no “INSERT-like” statements use the table-level AUTO-INC lock, and multiple statements can execute at the same time.

-

innodb_flush_log_at_trx_commit:

- In the event of a crash, both 0 and 2 can lose once second of data.

- The tradeoff is that both 0 and 2 increase write performance.

- I choose 0 over 2 because 0 flushes the InnoDB Log Buffer to the Transaction Logs (ib_logfile0, ib_logfile1) once per second, with or without a commit. Setting 2 flushes the InnoDB Log Buffer only on commit.

This answer in DBA StackExchange summarizes beautifully all parameters.

##

NOTE:

Don’t forget to change innodb_flush_log_at_trx_commit and innodb_autoinc_lock_mode to its default after migration is completed

.